How to Balance AI and Human Input in Hiring

scale.jobs

December 29, 2025

AI in hiring is transforming recruitment, but it’s not without challenges. Combining AI's efficiency with human judgment creates a balanced and fair process. Here’s what you need to know:

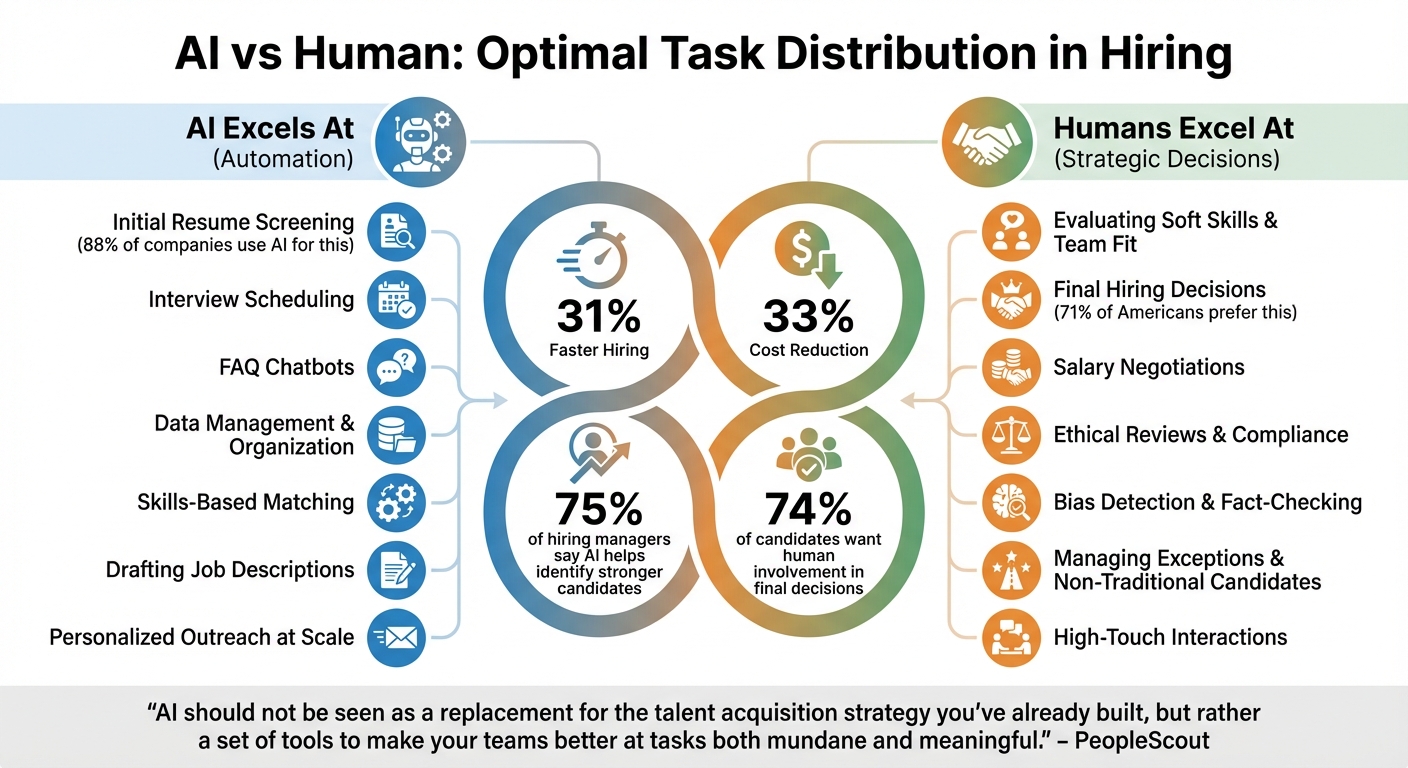

- AI excels at repetitive tasks like screening resumes, scheduling interviews, and managing data. It speeds up hiring by 31% and reduces costs by 33%.

- Human oversight is critical for final decisions, assessing soft skills, and ensuring compliance with anti-discrimination laws.

- Bias and legal risks exist: AI can amplify biases from historical data, leading to unfair outcomes. For example, some systems have disadvantaged Black male candidates or older applicants.

- Candidates value speed but want human interaction: 82% appreciate faster processing, but 74% prefer human involvement in final decisions.

- Best practices for hybrid workflows: Use AI for routine tasks but keep humans in charge of nuanced decisions. Implement checkpoints, conduct regular bias audits, and train recruiters to spot issues.

Key takeaway: AI should support - not replace - human recruiters. A thoughtful mix of automation and human input ensures more efficient, ethical, and effective hiring.

Risks and Limitations of AI in Hiring

AI can make the hiring process faster and more efficient, but relying on it without human oversight can create serious problems. When companies hand over too much control to algorithms, they risk reinforcing biases, violating legal requirements, and missing out on great candidates who don’t fit neatly into predefined data patterns. These challenges highlight the importance of combining AI tools with human judgment in recruitment.

Bias and Discrimination Risks

One of the biggest risks with AI in hiring is that it can inherit and amplify historical biases. For instance, if a company has historically hired more men for engineering roles, an AI system might assume that being male is somehow linked to success in engineering, rather than focusing on actual qualifications. A notable example came in 2023, when iTutorGroup paid $365,000 to settle a lawsuit brought by the EEOC. Its hiring software had been programmed to automatically reject female applicants aged 55 or older and male applicants aged 60 or older. The settlement led to significant reforms at the company and to government oversight.

Bias becomes even more complicated when multiple factors intersect. Research on open-source language models in 2024 revealed that these systems favored white-associated names 85% of the time and female-associated names only 11% of the time, despite identical qualifications. In some simulations, Black men were disadvantaged in up to 100% of cases when AI was used for resume screening. This kind of bias often carries over to human recruiters too: studies show they follow AI recommendations up to 90% of the time, a phenomenon known as "automation bias."

AI tools that analyze facial expressions, speech patterns, or voice data also struggle with accuracy for certain groups, leading to what’s sometimes called "technical discrimination." For example, candidates who lack technological proficiency - often older individuals, people with disabilities, or those from lower-income backgrounds - may be unfairly penalized by automated systems. These biases not only harm individuals but also create legal and ethical challenges for companies.

Legal and Compliance Concerns

Bias issues often lead directly to legal risks. Without proper oversight, AI systems can violate Equal Employment Opportunity laws and other regulations. Alarmingly, 21% of companies currently use AI to automatically reject candidates at all stages of the hiring process without any human review, a practice that can result in significant legal liabilities. In the UK, for example, introducing technology that disadvantages applicants with protected characteristics may breach the Equality Act 2010. Meanwhile, in the U.S., regulations like New York City's Local Law 144 now require annual bias audits for automated hiring tools.

Another challenge is the lack of transparency in many AI systems, often referred to as "black boxes." These systems make decisions that are difficult to explain, which can conflict with transparency requirements. For instance, Article 22 of the UK GDPR gives individuals the right to challenge decisions made solely by automated processes. Similarly, the EU AI Act classifies AI tools used in recruitment as high-risk, which brings stricter oversight requirements.

"If someone is going to use AI, which is inevitable, you have to keep in mind the various laws that apply to each situation."

- Helen Bloch, Law Offices of Helen Bloch, P.C.

Data privacy is another critical concern. Feeding personally identifiable information (PII) into public or external AI models risks data leaks and non-compliance with laws like GDPR and CCPA. Generative AI also has a tendency to produce "hallucinations" - plausible but false information - which could cause legal trouble if shared with candidates without proper fact-checking. Despite these risks, over 80% of employees report that their organizations have not provided any training on how to use generative AI tools responsibly.

Missing Human Empathy and Context

AI also lacks the ability to understand human context and nuance, which are essential in recruitment. It can only analyze data patterns, often missing the bigger picture. For example, strict "knockout" questions might automatically disqualify a highly qualified candidate over a minor detail that a human recruiter would consider irrelevant. Similarly, AI models might treat factors like ZIP codes or graduation years as proxies for protected characteristics such as race or age, and they can penalize gaps in employment, unintentionally disadvantaging candidates who took career breaks for reasons like parental leave.

"AI cannot replace human evaluation to ensure candidates meet certain qualifications requiring empathy and leadership competencies to name a few."

- Alison Stevens, Senior Director of HR Solutions, Paychex

While AI offers speed and efficiency, it simply cannot replicate the nuanced understanding and empathy that human recruiters bring to the table. Balancing technology with human insight is key to making fair and effective hiring decisions.

Setting Clear Roles for AI and Human Recruiters

AI vs Human Roles in Hiring: Task Distribution and Best Practices

Striking the right balance between AI and human involvement in recruiting means assigning tasks based on their respective strengths. AI shines in managing repetitive, time-consuming tasks, while humans bring essential qualities like judgment, empathy, and context to more nuanced decisions. By aligning responsibilities this way, you can create a hiring process that is both efficient and mindful of potential biases.

Using AI for Repetitive Tasks

AI is tailor-made for handling routine tasks that would otherwise drain hours of a recruiter's time. Take initial resume screening as an example - AI can sift through thousands of resumes in minutes, identifying candidates who meet specific requirements like skills, education, and experience. It’s no wonder that 88% of companies already rely on AI for this step. By automating such tasks, recruiters can focus on more strategic responsibilities.

Beyond screening, AI can also take care of scheduling interviews, answering FAQs through chatbots, and even drafting job descriptions or interview questions. It can personalize outreach messages to passive candidates at scale, saving countless hours.

Another key area where AI excels is data management. It organizes unstructured information within applicant tracking systems (ATS), preventing them from becoming chaotic and unmanageable. AI can also enable skills-based matching, analyzing detailed skill sets across profiles instead of relying on outdated filters like job titles or educational institutions.
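To make skills-based matching concrete, here is a minimal sketch in Python. It scores a candidate by the share of a role's required skills that appear in their profile, without looking at job titles or schools. The function name, the skill lists, and the scoring rule are all illustrative assumptions, not a description of how any particular ATS works.

```python
def skill_coverage(required_skills, candidate_skills):
    """Return the fraction of required skills the candidate has (0.0 to 1.0)."""
    # Normalize case and whitespace so "SQL " and "sql" count as the same skill.
    required = {s.strip().lower() for s in required_skills}
    candidate = {s.strip().lower() for s in candidate_skills}
    if not required:
        return 0.0
    return len(required & candidate) / len(required)


# Hypothetical role and profile, used only for illustration.
role = ["Python", "SQL", "Data Visualization", "Stakeholder Communication"]
profile = ["python", "sql ", "Excel", "data visualization"]

score = skill_coverage(role, profile)
print(f"Skill coverage: {score:.0%}")
```

A score like this is only a starting point for a recruiter's review. Exact string matching misses synonyms and adjacent skills, which is one reason a human should look at borderline profiles.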

"AI should not be seen as a replacement for the talent acquisition strategy you've already built, but rather a set of tools to make your teams better at tasks both mundane and meaningful."

- PeopleScout

Human Oversight for Key Decisions

While AI handles the tedious tasks, humans are indispensable for decisions that require nuance and judgment. For example, evaluating soft skills and team fit is something AI simply cannot do effectively. Traits like empathy, enthusiasm, and a candidate's potential to integrate with a team are critical to long-term success but fall outside the scope of what algorithms can assess.

Final hiring decisions should always remain in human hands. While 75% of hiring managers acknowledge that AI helps them identify stronger candidates, 71% of Americans are against AI making the ultimate hiring call. This isn’t just about public opinion - it’s about ensuring accountability and understanding context. Humans can consider factors that AI might overlook, like a candidate’s growth potential or a unique perspective that could enrich the team.

Humans also take the lead in high-touch interactions, such as salary negotiations and ethical reviews. They play a crucial role in fact-checking AI outputs, identifying potential biases, and ensuring compliance with equality and anti-discrimination laws.

"The tool should elevate human judgment, not replace it."

- Machine Hiring

Another important human role is managing exceptions. AI can unintentionally filter out candidates with non-traditional career paths, disabilities, or limited tech skills. A manual review process for these cases ensures that qualified candidates aren’t overlooked due to algorithmic blind spots.

Adding Human Oversight and Ethical Protocols

Once you've established clear roles for AI and humans in your hiring process, it's time to weave in essential checkpoints. These checkpoints, along with bias training and defined protocols, ensure your recruitment process remains efficient without compromising fairness. By combining AI's speed with human judgment, you create a system that prioritizes ethical hiring practices.

Setting Up Review Points

Think of review points as quality control stops where humans must give the green light before the process moves forward. These checkpoints ensure AI outputs are carefully vetted. For instance, before publishing an AI-generated job description, a recruiter must confirm that the language is inclusive. Similarly, before presenting a candidate shortlist to hiring managers, someone should verify that the AI hasn't unintentionally excluded qualified applicants from particular demographic groups.

This method, known as "Human-in-the-Loop" (HITL), is becoming a common standard. Only 21% of companies currently allow AI to reject applicants without human review, which suggests that most organizations recognize the need for oversight, even if implementation isn't always flawless. The solution? Make these review points mandatory, not optional. Configure your applicant tracking system so that no AI-generated output advances without human approval.

Additionally, provide alternative pathways for candidates who might face challenges with AI systems. For example, flag applications for manual review if candidates indicate disabilities or language barriers. In the UK, the Equality Act 2010 mandates reasonable adjustments for disabled applicants, and AI systems should support this by allowing manual intervention when needed.
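The review points described in this section can be sketched as a small gate in Python. This is a simplified illustration under stated assumptions: the `Application` fields, queue names, and function names are hypothetical and do not correspond to any real ATS API. The idea it shows is that the AI only suggests, every application is routed to a human queue, and no decision is recorded without a named reviewer.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Application:
    candidate_id: str
    ai_recommendation: str                 # "advance" or "reject", a suggestion only
    requested_accommodation: bool = False  # e.g. disability or language barrier
    reviewer: Optional[str] = None         # set only when a human signs off
    history: List[str] = field(default_factory=list)


def route(app: Application) -> str:
    """Pick a human review queue. The AI recommendation never finalizes anything."""
    if app.requested_accommodation:
        queue = "manual_review_priority"   # alternative pathway for flagged applications
    else:
        queue = "manual_review"            # standard human checkpoint
    app.history.append(f"routed to {queue} (AI suggested: {app.ai_recommendation})")
    return queue


def finalize(app: Application, decision: str, reviewer: str) -> str:
    """Record the final decision; refuse to proceed without a named human reviewer."""
    if not reviewer:
        raise ValueError("A human reviewer is required before any decision is final.")
    app.reviewer = reviewer
    app.history.append(f"{reviewer} decided: {decision}")
    return decision


# Hypothetical usage: the AI suggested rejection, a recruiter overrides it.
app = Application("c-101", ai_recommendation="reject", requested_accommodation=True)
print(route(app))
print(finalize(app, "advance", reviewer="j.doe"))
print(app.history)
```

Keeping the history on the record also gives auditors a trail showing who approved what, and whether the human agreed with the AI.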

Training Recruiters to Spot Bias

Your team needs the skills to identify and address bias in AI outputs. AI systems can introduce bias in two key ways: "Learnt Bias" (carried over from historical hiring data) and "Inaccuracy Bias" (where tools like voice or facial analysis may perform poorly for certain groups). Recruiters must be trained to recognize both.

One effective method is using the Implicit Association Test (IAT). Studies reveal that recruiters who take the IAT immediately before screening candidates are 13% more likely to select candidates who defy stereotypes. This exercise raises awareness of unconscious biases, which is crucial since human recruiters tend to follow AI's biased recommendations up to 90% of the time.

Training should also include "prompt fluency" and data literacy. Recruiters need to know how to question AI predictions, spot misleading outputs or "hallucinations", and override automated decisions when necessary. As Moninder Singh, Senior Research Scientist at IBM, emphasizes:

"An end user of a hiring tool must similarly bring in diversity at every step."

Consider setting up an AI Ethics Committee with members from HR, Legal, and Tech teams. This committee can evaluate AI use cases, ensure compliance, and handle escalations when recruiters identify potential issues. It's also important to provide candidates with a way to contest automated decisions and establish internal channels for addressing AI-related concerns.

Automation vs. Augmentation: Comparison Table

Understanding the distinction between automation and augmentation can help clarify where human oversight is most impactful. Here's a side-by-side comparison:

| Feature | Automation-Focused (Speed & Scale) | Augmentation-Focused (Human-Centered) |
|---|---|---|
| Primary Goal | Efficiency and cost reduction | Equity and quality of hire |
| AI Role | Handling repetitive tasks (e.g., scheduling, initial filtering) | Providing insights and predicting candidate potential |
| Human Role | Monitoring outputs for errors | Making final decisions and building relationships |
| Risk Profile | Higher risk of over-automation and impersonal processes | Lower risk; human judgment mitigates bias |
| Candidate Experience | Fast and available 24/7, but can feel impersonal | More personalized, empathetic, and open to feedback |

The augmentation model positions AI as the "backstage crew" - handling routine tasks and organizing data - while humans take center stage for strategic decisions and meaningful interactions. This balance reduces bias risk and delivers a more thoughtful, human-centered hiring experience for candidates.

Running Regular Audits and Making Improvements

Checkpoints are just the beginning. Regular audits keep your AI processes aligned with your fairness goals by catching biases early and preventing overreliance on automated outputs.

Bias and Fairness Audits

Consistently scheduled bias audits are essential: monthly for high-volume roles, or quarterly for general reviews. These audits evaluate both inputs and results to uncover discriminatory patterns tied to protected characteristics such as gender, race, age, or disability. Keep an eye on metrics like selection rates, false positives, and false negatives for each demographic group. For instance, if your AI system rejects 40% of male applicants but 60% of female applicants with similar qualifications, that’s a clear warning sign.
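The warning sign in that example can be quantified. The sketch below computes a selection rate per group and then divides each rate by the highest group's rate, a figure often called an impact ratio. The 0.8 cutoff follows the "four-fifths" rule of thumb from U.S. EEOC guidance; it is an assumption added here for illustration, not a threshold this article prescribes, and it is not a legal test on its own. The applicant counts are made up to match the 40% and 60% rejection rates above.

```python
def selection_rates(outcomes):
    """outcomes maps group -> (selected, total applicants); returns group -> rate."""
    return {group: selected / total for group, (selected, total) in outcomes.items()}


def impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("No group has any selections; ratios are undefined.")
    return {group: rate / best for group, rate in rates.items()}


# Hypothetical counts: 40% of men rejected (60% selected),
# 60% of women rejected (40% selected).
outcomes = {"male": (60, 100), "female": (40, 100)}

rates = selection_rates(outcomes)
ratios = impact_ratios(rates)

THRESHOLD = 0.8  # four-fifths rule of thumb (an assumption, see the text above)
flagged = [group for group, ratio in ratios.items() if ratio < THRESHOLD]

for group in outcomes:
    print(f"{group}: selection rate {rates[group]:.0%}, impact ratio {ratios[group]:.2f}")
print("Needs review:", flagged)
```

With these numbers the female impact ratio is about 0.67, which falls below 0.8 and would be flagged for a closer look. A flag is a prompt for investigation, not proof of discrimination; differences in qualifications or small sample sizes also need to be checked.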

Use tools like IBM's AI Fairness 360, Fairlearn, or Holistic AI to assess how your model treats different groups. These tools can detect subtle biases that might not be immediately apparent. Document every audit thoroughly, including findings and corrective actions. This isn't just good practice: laws like Illinois’s HB 3773 and Colorado’s SB 24-205, taking effect in 2026, will require such transparency.

Tracking Performance Metrics

Fairness is critical, but it’s also crucial to measure whether your AI-human workflow is delivering better hiring outcomes. Start by tracking efficiency metrics: AI-enhanced hiring processes often reduce time-to-hire by about 33%, cutting the average duration from 42 days to 28. Monitor cost-per-hire as well, as AI can lower this by approximately 30%, saving around $1,400 per hire.
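As a quick sanity check on figures like these, the arithmetic can be reproduced in a few lines of Python. The day counts and the dollar and percentage figures come from the paragraph above; the "implied baseline" is simply what those two cost figures would mean together and is an inference, not a number from the underlying research.

```python
def percent_reduction(before, after):
    """Percentage drop from `before` to `after`."""
    return (before - after) / before * 100


# Time-to-hire: 42 days down to 28 days (figures from the text).
time_saved = percent_reduction(42, 28)
print(f"Time-to-hire reduction: {time_saved:.1f}%")

# If $1,400 is roughly 30% of cost-per-hire, the implied baseline is:
implied_baseline = 1400 / 0.30
print(f"Implied baseline cost-per-hire: ${implied_baseline:,.0f}")
```

Running the same calculation on your own before-and-after numbers is a simple way to see whether your results are in line with the benchmarks quoted here.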

However, speed and cost savings mean little if the hires don’t perform well. Use quality of hire as a benchmark, assessing how new employees perform and their retention rates. While AI models can predict job performance with 78% accuracy and retention likelihood with 83% accuracy, it’s vital to compare these predictions against real-world outcomes. Regularly match AI-generated scores with human evaluations to ensure the system maintains precision.
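One way to compare predictions against real-world outcomes is to log what the AI predicted at hiring time and what actually happened later, then measure how often the two agree. The sketch below does this for retention. The data is invented and the 12-month window is an assumption chosen for illustration.

```python
def prediction_accuracy(predicted, actual):
    """Share of hires where the AI's prediction matched the observed outcome."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must be the same length")
    if not predicted:
        raise ValueError("need at least one hire to evaluate")
    matches = sum(p == a for p, a in zip(predicted, actual))
    return matches / len(predicted)


# Hypothetical data: True means "still employed after 12 months".
ai_predicted_retained = [True, True, False, True, True, False, True, True]
actually_retained = [True, False, False, True, True, True, True, True]

accuracy = prediction_accuracy(ai_predicted_retained, actually_retained)
print(f"Observed retention-prediction accuracy: {accuracy:.0%}")
```

If the accuracy you observe sits well below the figures a vendor quotes, that gap is worth raising before relying further on the model's scores. Note that this only covers people who were hired; rejected candidates have no outcome to compare against, so the measure is incomplete by design.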

Track human intervention rates too - how often recruiters step in to override AI decisions. If overrides are frequent, it could signal flaws in the model or its training data. On the flip side, if recruiters never question AI recommendations, it might indicate they’re relying too heavily on automation - a behavior research suggests happens up to 90% of the time. The ideal balance lies in allowing AI to handle routine tasks while leaving nuanced decisions to human judgment. These metrics feed into a continuous improvement loop that sharpens your hybrid hiring strategy over time.
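A minimal way to track human intervention is to log each AI recommendation alongside the recruiter's final decision and compute how often they differ. In the sketch below, the `low` and `high` bounds are placeholder values chosen for illustration, not benchmarks from the research cited here; each team would need to set them from its own baseline.

```python
def override_rate(decisions):
    """decisions is a list of (ai_recommendation, human_decision) pairs."""
    if not decisions:
        raise ValueError("no decisions to analyze")
    overrides = sum(1 for ai, human in decisions if ai != human)
    return overrides / len(decisions)


def interpret(rate, low=0.02, high=0.30):
    """Map an override rate to a rough signal. low/high are placeholder bounds."""
    if rate < low:
        return "possible over-reliance on AI recommendations"
    if rate > high:
        return "possible model or training-data problem"
    return "within expected range"


# Hypothetical decision log: one override out of five decisions.
log = [
    ("advance", "advance"),
    ("reject", "advance"),
    ("reject", "reject"),
    ("advance", "advance"),
    ("reject", "reject"),
]

rate = override_rate(log)
print(f"Override rate: {rate:.0%} -> {interpret(rate)}")
```

Breaking the same rate down by recruiter, role, or demographic group can show whether overrides cluster in one place, which is often more informative than the overall number.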

Making Ongoing Improvements

With regular audits and bias training in place, your recruitment process can remain fair and efficient. When bias is identified, act swiftly to adjust. Post-processing techniques - like reweighting recommendations or modifying scores - can help. For example, if your AI undervalues candidates from non-target universities, consider refining the training data or introducing blind screening features that remove educational details during initial evaluations.
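Blind screening can be as simple as handing the screening step a copy of each record with certain fields removed. The sketch below assumes candidate records are plain dictionaries; the field names and the choice of which fields to hide are hypothetical and would depend on your own data and on legal advice.

```python
# Fields hidden during the first screening pass (an illustrative choice).
REDACTED_FIELDS = {"name", "university", "graduation_year", "zip_code"}


def blind_copy(candidate: dict) -> dict:
    """Return a copy of the record without redacted fields; the original is untouched."""
    return {key: value for key, value in candidate.items() if key not in REDACTED_FIELDS}


# Hypothetical candidate record.
candidate = {
    "candidate_id": "c-204",
    "name": "Alex Example",
    "university": "State College",
    "graduation_year": 2009,
    "zip_code": "00000",
    "skills": ["python", "sql"],
    "years_experience": 12,
}

screening_view = blind_copy(candidate)
print(screening_view)
```

Because the original record is left intact, the full details remain available to human reviewers at later stages. Redaction does not remove every proxy (free-text fields can still leak the same information), so it complements bias audits and does not replace them.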

Take Unilever as an example. They implemented AI-driven games and video interviews to screen over 250,000 applicants annually. By 2021, they saved over 100,000 hours of recruiter time in a single year and increased hires from non-target universities by 16%. This demonstrates how thoughtfully applied AI can boost both efficiency and diversity.

To maintain consistency, give the cross-functional AI Ethics Committee (with members from HR, Legal, and Tech teams) ownership of this improvement loop. The group should oversee audit results, approve system updates, and address any escalated concerns. Quarterly meetings can help review metrics, resolve issues, and refine protocols. Remember, AI systems aren’t "set it and forget it." They demand ongoing adjustments, human oversight, and a commitment to fairness that goes beyond mere compliance. This iterative approach helps your hiring process balance efficiency with the human touch.

Creating a Hybrid Workflow with Tools and Platforms

How Technology Supports Hybrid Workflows

An effective hybrid recruitment strategy hinges on the right platform. Hybrid platforms blend the speed of AI with the nuanced insight of human judgment. One critical function these platforms excel at is data standardization - transforming unstructured information into a format that seamlessly integrates with Applicant Tracking Systems (ATS). This process helps tackle the classic "garbage in, garbage out" problem that often plagues recruitment teams.

Integration is another cornerstone of success. Whether you opt for native, external, or embedded AI solutions, the goal is to enhance your existing ATS without unnecessary friction. A compelling example comes from a March 2025 study by Stanford and USC researchers in collaboration with micro1. They found that their AI-led interview system achieved a 53.12% success rate in final human interviews, compared to just 28.57% for traditional resume screening. Even more striking, the system reduced financial costs by a whopping 87.64%. This kind of streamlined integration not only delivers measurable results but also ensures a recruitment process that is both efficient and equitable.

Why scale.jobs Works for Balanced Hiring

When it comes to hybrid workflows, scale.jobs stands out as a prime example of balanced hiring practices. Unlike competitors such as Jobscan and LazyApply, scale.jobs offers a true hybrid approach that effectively combines the precision of AI with the reliability of human oversight.

One of the platform's standout features is its human-powered application submission service. Trained virtual assistants handle application submissions, bypassing bot detection systems that could otherwise flag and reject applications. This is particularly crucial considering that 21% of companies automatically reject candidates without any human review. On top of this, scale.jobs provides real-time WhatsApp updates, complete with timestamped work screenshots, ensuring full transparency throughout the process. This level of communication is vital, especially when 74% of candidates still prefer human interaction during the final stages of hiring.

The platform’s pricing model also reflects its hybrid philosophy. Instead of locking users into recurring subscription plans like Teal HQ or Resume Genius, scale.jobs offers flat-fee campaign bundles, starting at $199 for 250 applications. This straightforward pricing gives users flexibility and value without hidden costs. Additionally, the platform supports all types of job portals, from major corporate ATS platforms to niche job boards. It even includes free tools like an ATS resume checker and an interview question predictor.

For organizations looking to strike the right balance between AI-driven efficiency and the human touch, scale.jobs provides a seamless solution. Its combination of advanced technology and human verification ensures a hiring process that is both effective and personalized, paving the way for better long-term hiring outcomes.

Conclusion

Combining AI with human oversight in hiring is essential for creating a process that is efficient, fair, and compliant with legal standards. Research shows that AI tools can lead to 31% faster hiring and 50% higher-quality hires, but 71% of Americans oppose AI-only decisions in recruitment. This makes a hybrid approach not just practical but necessary.

The key lies in defining roles clearly. AI can take on repetitive, high-volume tasks like resume sorting, initial screenings, and scheduling interviews. Meanwhile, humans can focus on assessing soft skills, evaluating fit within the team, and making final hiring decisions. This balanced approach has been shown to reduce hiring bias by 56-61% while respecting candidates' preference for human interaction.

To ensure fairness and accuracy, it’s important to establish checkpoints where recruiters can review AI outputs, conduct bias audits on a regular schedule (monthly for high-volume roles, quarterly otherwise), and train staff to identify issues like algorithmic drift. As Utkarsh Amitabh and Ali Ansari from the World Economic Forum explain:

"Human oversight helps refine AI-driven processes, ensuring fairness and mitigating potential biases."

This balance is already being implemented by tools like scale.jobs, which merge AI-powered resume optimization with human oversight. Their system bypasses bot filters and provides transparency through real-time WhatsApp updates and timestamped screenshots. With flat-fee pricing starting at $199 for 250 applications, they eliminate hidden costs while maintaining accountability.

FAQs

How can companies prevent AI from perpetuating biases in hiring?

To ensure AI doesn't amplify biases in hiring, companies must prioritize fairness and transparency when developing their AI tools. This starts with a thorough audit of historical hiring data to identify biases linked to gender, race, or ethnicity. Any variables that could unintentionally perpetuate discrimination should be either removed or adjusted. Businesses should also leverage explainability tools to understand how candidates are ranked or filtered, enabling recruiters to spot and address any problematic patterns.

Human oversight plays a crucial role in this process. Recruiters should carefully review AI-generated recommendations, ensuring AI is used for tasks like skill-based matching rather than making final decisions. Training hiring managers to recognize and mitigate bias is equally important. By letting AI handle efficiency-focused tasks while humans evaluate factors like potential and team compatibility, companies can strike a balance between fairness and productivity.

Regular monitoring and audits are also key. Tracking fairness metrics, updating AI systems with diverse datasets, and conducting bias assessments before deploying major updates can help keep the process accountable. By combining clean data, transparency, human judgment, and consistent evaluations, businesses can harness AI’s potential responsibly - without reinforcing historical hiring biases.

What legal steps should companies take when using AI in hiring?

When incorporating AI into recruitment processes, businesses must tread carefully to comply with employment and data privacy laws, ensuring they sidestep potential legal pitfalls.

In the U.S., anti-discrimination laws such as Title VII of the Civil Rights Act of 1964, the Age Discrimination in Employment Act (ADEA), and the Americans with Disabilities Act (ADA) prohibit hiring practices, including AI-driven ones, that discriminate based on race, gender, age, disability, or other protected traits. To meet these standards, companies should conduct regular bias testing and maintain human oversight. These steps help promote fairness and minimize the risk of unintentional discrimination.

On the data privacy front, regulations like the California Consumer Privacy Act (CCPA) and the General Data Protection Regulation (GDPR) set strict guidelines. Organizations must safeguard candidate data, obtain consent for its use where the law requires it, and offer candidates the ability to correct or delete their information. Transparency is crucial: candidates should know when AI is part of the decision-making process and receive clear explanations of outcomes. Maintaining thorough records of AI decisions and any human involvement can further demonstrate accountability and adherence to legal requirements.

By embedding these practices, companies can responsibly leverage AI in hiring while staying firmly within the bounds of the law.

Why is human oversight important in AI-powered hiring?

Human involvement plays a crucial role in making sure AI-powered hiring systems stay fair, ethical, and in line with anti-discrimination laws. Without this oversight, AI can unintentionally reinforce biases, miss subtle but important qualities in candidates, or make decisions that lack proper context.

Research highlights how biased algorithms can skew hiring outcomes, often giving unfair advantages to certain groups. Human reviewers are key in spotting and addressing these issues. They can evaluate unique circumstances like career breaks or transferable skills and ensure hiring decisions reflect both legal requirements and company values.

By blending AI's speed and precision with human insight, companies can build a hiring process that's not only efficient but also fair - earning the trust of both candidates and hiring teams.