Top 10 Machine Learning Case Studies for Resumes

scale.jobs

January 10, 2026

Machine learning projects on your resume can set you apart by demonstrating your ability to solve business problems and deliver measurable results. From fraud detection to predictive maintenance, these case studies highlight skills employers value, including deployment, monitoring, and achieving tangible outcomes.

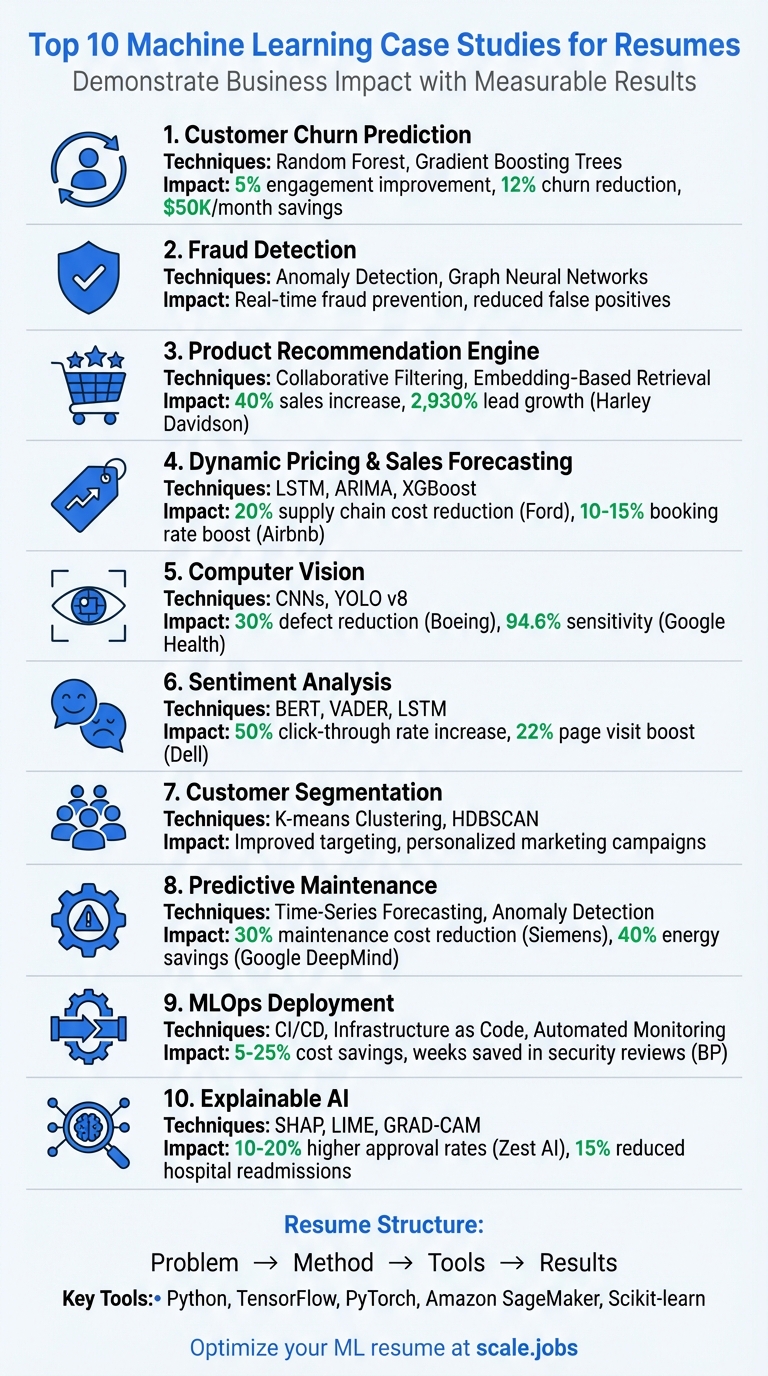

Here’s a quick overview of the 10 key ML case studies to include:

- Customer Churn Prediction: Reduce subscription cancellations using algorithms like Random Forest and Gradient Boosting Trees.

- Fraud Detection: Prevent financial losses with anomaly detection and Graph Neural Networks.

- Product Recommendation Engines: Boost e-commerce sales with techniques like collaborative filtering and embedding-based retrieval.

- Dynamic Pricing & Sales Forecasting: Optimize pricing and inventory using time series models like LSTM and ARIMA.

- Computer Vision: Detect defects or classify images with CNNs and YOLO for industries like manufacturing and healthcare.

- Sentiment Analysis: Analyze customer feedback with NLP models like BERT and VADER.

- Customer Segmentation: Group customers using K-means clustering for personalized marketing.

- Predictive Maintenance: Prevent equipment failures with IoT data and time-series forecasting.

- MLOps Deployment: Build scalable pipelines for deploying and monitoring ML models.

- Explainable AI: Ensure transparency in high-stakes domains like finance and healthcare with SHAP and LIME.

To stand out, structure your case studies with Problem → Method → Tools → Results and include metrics to showcase the impact, such as cost savings, revenue growth, or accuracy improvements. Tools like Python, Amazon SageMaker, TensorFlow, and PyTorch are frequently used. Platforms like scale.jobs can help optimize your resume for ATS systems, ensuring your ML achievements make a lasting impression.

Top 10 Machine Learning Case Studies for Resumes with Key Metrics

4 Real Machine Learning Projects That Get You Hired - No More Tutorials!

How to Add Machine Learning Case Studies to Your Resume

Where you place your machine learning (ML) case studies on your resume depends on how you gained the experience. If you worked on paid projects, list them under Experience and emphasize the business outcomes and teamwork involved. For personal projects, include them in a Projects section with direct links to your GitHub repository. Academic work that resulted in a published paper or a novel algorithm should go under Research, highlighting publications and experimental achievements.

Each case study should follow a clear four-part structure: Problem → Method → Tools → Results. For example: “Predicted customer churn using Random Forest and achieved a 12% reduction, saving $50K/month.” This format not only aligns with how hiring managers assess candidates but also ensures your resume passes applicant tracking systems (ATS) more effectively than vague descriptions.

Make sure to include links to your GitHub, Kaggle, or portfolio so recruiters can evaluate your code and implementation. Use strong action verbs like "developed", "built", or "deployed" to start each bullet point, and keep your points concise and scannable. Save your resume as a PDF using professional fonts like Calibri or Didot to ensure consistent formatting across devices.

To simplify this process, platforms like scale.jobs offer tools to optimize your resume for ATS while incorporating human expertise. Their free ATS-compliant Resume Builder and AI Assistant Pro (available at a $9/month launch price) can create tailored resumes for each job application with just one click. Unlike competitors like Huntr ($30/month), Rezi AI ($29/month), or Teal ($29/month), scale.jobs combines automation with real-time feedback from human experts via WhatsApp. This hybrid approach addresses issues automated tools might miss.

Here’s what sets scale.jobs apart:

- AI-tailored resumes for individual applications in a single click

- Expert review via WhatsApp, ensuring proper formatting and structure

- Flat-fee pricing starting at $199 for 250 applications, avoiding recurring subscriptions

- Proof-of-work screenshots and refunds for unused credits for added transparency

- Visa compliance support for ML professionals handling H-1B, OPT, or TN requirements

These features make it easier to craft a polished, professional resume that stands out in the competitive ML job market.

1. Customer Churn Prediction for Subscription Businesses

Business problem addressed

For subscription companies, every canceled membership represents lost revenue. Churn prediction plays a critical role in minimizing these losses by identifying customers who are likely to cancel before they actually do. This insight allows businesses to proactively target at-risk subscribers with retention strategies. Key indicators often include declining usage, unresolved billing issues, or frequent service complaints. By uncovering these patterns, companies can use advanced machine learning (ML) techniques to take preemptive action and retain valuable customers.

Machine learning techniques used

Algorithms like Gradient Boosting Trees, Random Forest, AdaBoost, and Support Vector Machines (SVM) are frequently used for churn prediction. These models excel at handling complex, non-linear relationships in customer data. They analyze historical patterns such as billing history, contract details, subscription tenure, and usage trends. Gradient Boosting, in particular, stands out for its ability to work with incomplete data and detect subtle behavioral signals that might go unnoticed with simpler models.

Tools and frameworks applied

Churn prediction projects typically rely on Python for building models, GitHub for version control, and cloud services like Amazon SageMaker or Amazon Redshift ML for scaling and deployment. A standout example comes from July 2021, when Jobcase - a platform with 110 million members - implemented Amazon Redshift ML to supercharge their recommendation and retention systems. Their in-database ML setup processed billions of predictions daily, reducing prediction times from 4–5 hours to mere minutes. This upgrade also enabled them to test entire datasets in under a week, a task that previously took 1–2 months. The efficiency gains highlight the power of pairing robust tools with well-designed ML pipelines.

Measurable business impact

Within just four weeks, Jobcase achieved a 5% improvement in engagement metrics while slashing prediction times from hours to minutes and cutting testing durations from months to weeks. Remarkably, these advancements came without increasing infrastructure costs. For professionals, showcasing similar achievements with an AI resume builder can make a strong impression. Highlight specific metrics like retention improvements, cost savings, or revenue preserved to demonstrate the real-world impact of your ML expertise. Recruiters are drawn to tangible results that clearly connect technical work to business outcomes.

2. Fraud Detection for Financial Transactions

Business problem addressed

Every year, financial institutions face massive losses due to fraudulent transactions, identity theft, and unauthorized account access. Traditional rule-based systems often fail to keep up with the ever-changing tactics of fraudsters, leading to a frustrating number of false positives for legitimate customers. The real challenge is spotting suspicious activity in real time without interrupting legitimate transactions. Machine learning steps in here, analyzing transaction patterns, user behavior, and network relationships to detect anomalies before any damage is done. This approach not only safeguards company revenue but also helps maintain customer trust. To tackle these evolving threats, advanced ML techniques are a must for real-time fraud detection.

Machine learning techniques used

Modern fraud detection relies on adaptive models that continuously learn new fraud patterns, removing the need for frequent manual updates. Anomaly detection algorithms mimic biological systems, flagging deviations from an account's usual "pattern of life" without requiring predefined fraud markers. Graph Neural Networks (GNNs) are particularly effective for uncovering complex fraud schemes, such as coordinated payment fraud rings or account-hopping tactics, by analyzing relationships between accounts. Supervised learning models like Logistic Regression, Decision Trees, and Random Forests classify transactions based on historical data, while ensemble methods like XGBoost and AdaBoost handle more intricate patterns and relationships.

Tools and frameworks applied

Python serves as the backbone for developing fraud detection systems, often paired with cloud platforms like Amazon SageMaker for managing the entire model lifecycle and enabling serverless training. Companies like Stripe rely on specialized tools like Stripe Radar for real-time fraud prevention. Additionally, Amazon Redshift ML allows for in-database predictions using SQL, capable of processing billions of records in minutes. Graph-based models are increasingly integrated into these systems to identify sophisticated fraud patterns that traditional supervised models might overlook. Together, these tools not only enhance fraud detection capabilities but also deliver measurable financial benefits.

Measurable business impact

The implementation of advanced machine learning models for fraud detection translates into immediate cost savings by stopping fraudulent transactions before they are completed. Beyond financial protection, these systems enhance the customer experience by minimizing false positives, ensuring legitimate transactions go through without unnecessary delays. By automating anomaly detection, security teams can shift their focus to high-priority investigations rather than manual transaction reviews, boosting overall efficiency. For your resume or portfolio, highlight specific achievements like reducing fraud losses by a certain percentage, improving false positive rates, or speeding up processing times. Demonstrating how your work connects technical expertise to real-world business results - like safeguarding revenue and improving customer satisfaction - can set you apart.

3. Product Recommendation Engine for E-Commerce

Business problem addressed

E-commerce platforms often struggle to help customers navigate massive product catalogs. When faced with too many choices, shoppers can feel overwhelmed, leading to abandoned carts and lost sales to competitors. A well-crafted recommendation engine addresses this by using data like browsing history, past purchases, and customer similarities to suggest relevant products. These systems don’t just enhance the shopping experience - they also open doors for effective cross-selling and up-selling. Additionally, they tackle the cold start problem, which involves recommending products to new users without any purchase history. Techniques like cross-domain learning help overcome this hurdle, pushing the boundaries of machine learning to improve product recommendations.

Machine learning techniques used

Several advanced machine learning techniques power modern recommendation engines:

- Market Basket Analysis: The Apriori algorithm identifies patterns in purchase behavior, uncovering frequent itemsets and association rules that guide product recommendations.

- Embedding-Based Retrieval (EBR): This method represents users and items as vectors in a high-dimensional space, enabling more relevant, context-aware suggestions. Pinterest, for instance, uses EBR for its Homefeed, combining offline approximate nearest neighbors to improve content retrieval and engagement.

- Foundation Models and Large Language Models (LLMs): These models are increasingly replacing traditional collaborative filtering methods, offering deeper and more personalized recommendations.

- Contextual Bandits: This technique optimizes real-time recommendations by adapting to users' immediate responses, ensuring more dynamic and personalized interactions.

These techniques are not just theoretical - they directly inform scalable implementations, as discussed in the tools section.

Tools and frameworks applied

To build these recommendation engines, developers often rely on Python alongside libraries like XGBoost, KNN, and Random Forest. Cloud platforms such as Amazon SageMaker streamline training and deployment processes. Additionally, Amazon Redshift ML allows for in-database predictions using SQL, making it easier to integrate machine learning into existing systems. Real-world examples include Dell Technologies partnering with Persado to create AI-driven personalized marketing content and Harley Davidson leveraging the AI platform "Albert" to analyze customer data and segment audiences effectively.

Measurable business impact

The results from implementing recommendation engines speak volumes. Harley Davidson saw a 40% increase in sales and an astounding 2,930% rise in leads, with half of those leads coming from lookalike audiences identified by their system. Meanwhile, Dell Technologies experienced a 22% boost in page visits and a 50% increase in click-through rates. For professionals working on recommendation engines, it's crucial to showcase these kinds of results on your resume. Highlight metrics like increased revenue from personalized recommendations, a higher average order value, or reduced cart abandonment rates. These numbers demonstrate not only your technical skills but also your ability to drive tangible business outcomes.

4. Dynamic Pricing or Sales Forecasting with Time Series

Business Problem Addressed

Businesses face a constant balancing act when it comes to pricing and inventory management. Set prices too high, and customers may walk away; set them too low, and you leave money on the table. Similarly, inaccurate sales forecasts can lead to stockouts or overstocked inventories - both of which frustrate customers and strain resources. This is where dynamic pricing and sales forecasting come into play.

Dynamic pricing leverages machine learning to adjust prices in real time based on factors like demand, supply, and market trends. Think about how Airbnb adjusts nightly rates or how airlines tweak ticket prices depending on booking patterns. Meanwhile, sales forecasting helps businesses predict future demand, aligning their supply chain with market needs. This reduces logistical costs and enhances customer satisfaction - key drivers for any successful operation. Together, these techniques provide a robust framework to tackle pricing and forecasting challenges.

Machine Learning Techniques Used

Time series forecasting relies on a range of machine learning methods to deliver accurate predictions. For instance, ARIMA (AutoRegressive Integrated Moving Average) is a classic approach used in applications such as stock market and weather forecasting. On the other hand, LSTM (Long Short-Term Memory) networks excel at identifying long-term patterns in sequential data. Uber, for example, uses a mix of LSTM networks and ARIMA models to predict ride demand, fine-tuning driver allocation and surge pricing strategies.

Dynamic pricing often employs ensemble methods like Random Forests and Gradient Boosted Trees (e.g., XGBoost, LightGBM) to analyze historical pricing data and predict optimal price points. Tools like Facebook's Prophet are particularly effective for managing multiple seasonal patterns and external factors like holidays. Advanced methods, such as Conformal Prediction and Bayesian Neural Networks, are also gaining traction, offering prediction intervals instead of single estimates - an invaluable feature when managing inventory under uncertain conditions. Mastering these techniques not only highlights technical expertise but also shows the ability to solve real-world business problems, a highly sought-after skill in the U.S. job market.

Tools and Frameworks Applied

Python remains the go-to language for implementing time series models, thanks to its rich ecosystem of libraries like Scikit-learn, XGBoost, and PyTorch. Cloud platforms such as Amazon SageMaker simplify the entire workflow, from training models to deploying them. Amazon Redshift ML, on the other hand, enables predictions directly within data warehouses, eliminating the need for costly data transfers.

Cloud-based MLOps frameworks further enhance scalability, ensuring models are well-managed, monitored, and maintainable in production environments. These tools also enforce code quality and auditability, making them indispensable for teams working at scale.

"The Model DevOps Framework, powered by AWS, creates a standardized approach to provide control, management, monitoring, and auditability of data models while enforcing code quality."

- Chris Coley, In-house Data Scientist, bp

By leveraging these tools, companies can streamline forecasting processes and gain a competitive edge in the market.

Measurable Business Impact

The financial benefits of time series forecasting and dynamic pricing are hard to ignore. Ford Motor Company, for instance, cut supply chain costs by 20% using demand prediction models. Google DeepMind slashed data center cooling energy usage by 40% through ML-based load forecasting. Lyft used real-time predictive models to reduce passenger wait times by 15%. And Airbnb’s dynamic pricing algorithms have boosted booking rates for hosts by 10–15%, all while keeping them competitive in the market.

When presenting these kinds of projects on your resume, focus on measurable results. Highlight metrics like improved forecast accuracy, revenue growth from optimized pricing, or cost savings from better inventory management. These numbers not only showcase your technical expertise but also underline your ability to drive tangible business outcomes.

5. Computer Vision for Image Classification or Defect Detection

Business Problem Addressed

Industries like manufacturing, healthcare, and retail face a common challenge: the need for fast and accurate anomaly detection. In manufacturing, missing a single defect in a component can lead to expensive recalls or equipment breakdowns. Healthcare organizations often lack enough specialists to review medical images for subtle signs of disease. Meanwhile, retail and media companies must maintain the visual quality of countless digital assets to keep users engaged.

Computer vision steps in by automating these inspections, ensuring consistent accuracy while reducing the workload on human inspectors. For instance, Boeing uses computer vision to analyze manufacturing images, leading to a 30% reduction in defects across their aerospace production lines by catching flaws that manual inspections missed. In healthcare, Google DeepMind's system for analyzing retinal images detects diabetic retinopathy with expert-level accuracy, enabling quicker screenings in underserved areas where specialists are scarce. These real-world applications highlight the precision and efficiency that computer vision brings to the table.

Machine Learning Techniques Used

At the heart of most image classification and defect detection tasks are Convolutional Neural Networks (CNNs). These models are exceptional at identifying spatial patterns, making them perfect for detecting manufacturing flaws or medical anomalies. For real-time needs, architectures like YOLO v8 and Detectron2 deliver both speed and accuracy, ideal for factory floor operations.

To reduce data requirements, transfer learning with pre-trained models such as ResNet or VGG is commonly employed. Handling imbalanced datasets often involves using techniques like Focal Loss. For better interpretability, methods like GRAD-CAM and LIME help visualize which parts of an image the model focuses on, ensuring it's identifying real defects rather than irrelevant details. A notable example is Google Health's AI system for breast cancer detection, which achieved a sensitivity of 94.6%, outperforming the 88.0% sensitivity of human radiologists in 2024. This demonstrates how well-trained CNNs can exceed manual analysis in accuracy.

Tools and Frameworks Applied

For basic image processing tasks like face detection, OpenCV is a reliable choice. When it comes to deep learning, TensorFlow and PyTorch are the go-to frameworks, offering powerful tools for building and training CNNs. Keras simplifies prototyping with its high-level API, while Gradio makes it easy to create interactive web interfaces for showcasing models.

In industrial applications, optimizing models is key. Converting them to formats like TensorRT or ONNX reduces latency, enabling real-time quality control on edge devices. Tesla's Autopilot system exemplifies this, using CNNs to process camera and sensor data for tasks like object detection and lane recognition. By optimizing computer vision models, companies can streamline operations and achieve measurable results.

Measurable Business Impact

These computer vision projects deliver clear benefits, from cost savings to efficiency improvements. Boeing's 30% reduction in defects directly lowers production costs and minimizes warranty claims. Google DeepMind's diabetic retinopathy detection system has sped up screenings for thousands of patients in areas with limited specialist availability. Similarly, GE Healthcare uses CNNs to analyze medical imaging like MRIs and X-rays, identifying subtle anomalies and significantly reducing diagnosis time.

When highlighting such projects on your resume, focus on quantifiable results. For example, mention accuracy improvements ("achieved 95% defect detection accuracy"), time savings ("reduced inspection time from 5 minutes to 30 seconds per unit"), or cost reductions ("cut false positives by 40%, saving $50,000 annually in rework costs"). These metrics not only demonstrate your technical expertise but also your ability to deliver tangible business value - an essential quality that resonates with U.S. employers. Showing how your work impacts the bottom line can make you stand out.

6. Natural Language Processing for Sentiment Analysis

Business Problem Addressed

Businesses generate an overwhelming amount of unstructured text data - think customer reviews, social media posts, support tickets, and surveys. Without automated tools, it’s nearly impossible to sift through this data to uncover insights. Marketing teams might miss which campaigns connect best with audiences. Customer service departments could overlook early signs of dissatisfaction. And product teams might fail to catch valuable feedback buried in thousands of comments. Sentiment analysis solves these problems by categorizing text as positive, negative, or neutral, allowing businesses to address customer opinions efficiently and at scale.

Take Dell Technologies as an example. By using natural language processing (NLP) to evaluate which words and phrases sparked positive emotional reactions, they improved their email marketing campaigns. The results? A 22% jump in average page visits and a 50% boost in click-through rates. Similarly, Harley Davidson adopted an AI-driven sentiment analysis system to segment customers and tailor their messaging. This led to a 40% increase in sales and an astonishing 2,930% surge in leads. These examples show how sentiment analysis transforms raw text into actionable business strategies.

Machine Learning Techniques Used

To tackle sentiment analysis, a variety of machine learning models come into play. Traditional models like Naive Bayes, Logistic Regression, and Random Forest often serve as starting points, with Random Forest achieving around 85% accuracy in many sentiment tasks. For more complex scenarios, deep learning models like Long Short-Term Memory networks (LSTMs) are used to capture the context in longer text passages. Among cutting-edge approaches, BERT (Bidirectional Encoder Representations from Transformers) has become the go-to model for context-aware sentiment analysis.

When dealing with social media, tools like VADER (Valence Aware Dictionary and sEntiment Reasoner) shine, as they handle informal text, emojis, and slang effectively. For even more granular insights, Aspect-Based Sentiment Analysis (ABSA) can pinpoint opinions about specific product features. Mastering these advanced techniques not only enhances your skill set but also helps you stand out in a competitive job market.

Tools and Frameworks Applied

For preprocessing tasks like tokenization, lemmatization, and stop-word removal, libraries such as NLTK and spaCy are excellent choices. To transform text into numerical data, vectorization methods like TF-IDF or Bag of Words are commonly used. When building deep learning models, frameworks like TensorFlow, PyTorch, and Keras provide powerful tools for training LSTMs or Transformer-based models. Pre-trained models, such as BERT or the Universal Sentence Encoder (USE) from Hugging Face's Transformers library, can save time while maintaining high levels of accuracy.

For traditional machine learning workflows, Scikit-learn remains a reliable option for implementing classification algorithms and evaluating model performance. Sentiment analysis models can also be deployed in real-time applications, like chatbots that adjust responses based on customer sentiment or systems that trigger alerts for negative feedback spikes. Microsoft, for instance, has successfully used these integrations in healthcare to lower hospital readmission rates. These tools and frameworks connect the dots between technical execution and tangible business value.

Measurable Business Impact

When showcasing sentiment analysis projects on your resume, focus on the results. Highlight metrics like a 50% improvement in click-through rates or a significant increase in sales. Demonstrating how your technical expertise translates into real-world business outcomes can make your profile stand out to potential employers.

7. Customer Segmentation with Unsupervised Learning

Business Problem Addressed

Many companies face the challenge of treating all customers the same, sending out identical marketing messages without considering individual preferences or behaviors. This one-size-fits-all approach not only wastes marketing budgets but also misses the chance to engage customers on a more personal level. Unsupervised learning steps in to solve this by identifying natural groupings among customers - whether based on purchasing habits, browsing behavior, or product preferences. These insights allow businesses to craft targeted strategies instead of blanketing everyone with the same message, all without requiring pre-labeled data.

For example, a major automotive retailer used unsupervised learning to create tailored promotions, significantly boosting customer engagement. This kind of segmentation not only solves a pressing business problem but also highlights technical skills that translate into real-world growth, making it a valuable project for your AI-optimized resume.

Machine Learning Techniques Used

K-means clustering is a go-to algorithm for customer segmentation. It works by grouping customers into clusters based on shared attributes, such as transaction history, session duration, or purchase frequency. The algorithm minimizes the distance between data points within a cluster and their central point. For unstructured data like customer reviews or social media posts, topic modeling techniques uncover dominant themes and interests that define customer segments.

When showcasing segmentation projects, it's crucial to outline the full workflow: data cleaning, feature extraction, determining the optimal number of clusters (using methods like the Elbow Method), and connecting the results to actionable business strategies. For more complex datasets, HDBSCAN can identify clusters of varying densities, which is especially helpful when customer groups differ significantly in size or behavior.

Tools and Frameworks Applied

For implementing K-means clustering and dimensionality reduction techniques like Principal Component Analysis (PCA), Scikit-learn is the industry standard, offering user-friendly APIs. When handling unstructured text data, transformer-based frameworks like BERT, RoBERTa, and the Universal Sentence Encoder (USE) are invaluable. These tools convert text into semantic embeddings, making it easier to cluster and analyze.

Visualization tools like UMAP and PCA help reduce high-dimensional data into more interpretable formats, making cluster patterns clearer. For large-scale datasets, Faiss (Facebook AI Similarity Search) is a powerful tool for efficient similarity searches and clustering. Cloud platforms like Amazon SageMaker provide a managed environment for building, training, and deploying segmentation models at scale. Companies such as Walmart use similar techniques to analyze in-store behavior, segment customers based on movement and purchasing patterns, and optimize store layouts for improved customer flow.

Measurable Business Impact

Unsupervised learning transforms raw customer data into actionable insights, delivering measurable business outcomes. When adding segmentation projects to your resume, tie your technical work to real-world results. For instance, Twitter (now X) uses real-time keyword clustering to identify trends and group users by shared interests, enabling more relevant ad targeting and content recommendations. This demonstrates how unsupervised learning can drive business value by turning technical expertise into practical solutions. Projects like these highlight how machine learning can create a direct and meaningful impact across industries.

8. Predictive Maintenance for IoT and Sensor Data

Business Problem Addressed

In industries like aviation, energy, and manufacturing, equipment failures can lead to massive financial losses and serious safety risks. Imagine a jet engine malfunctioning mid-flight or an oil well sensor failing - such events disrupt operations and can have far-reaching consequences. Traditional maintenance approaches fall short: reactive maintenance waits for breakdowns, while scheduled maintenance often replaces parts too early. Predictive maintenance, powered by IoT sensor data, offers a smarter solution by pinpointing potential failures before they happen, allowing businesses to switch from reactive fixes to proactive strategies.

Take General Electric as an example. By integrating predictive maintenance into its Industrial Internet of Things (IIoT) platform, GE uses machine learning to process data from sensors embedded in machinery. The system identifies anomalies and patterns in real time, cutting down on unplanned downtime and extending the life of critical equipment across sectors like aviation and energy. This approach not only prevents costly failures but also ensures resources are used more efficiently - no more replacing parts unnecessarily or dealing with the fallout of unexpected shutdowns. It's a practical demonstration of how machine learning can transform operations, similar to its success in improving customer engagement and fraud detection.

Machine Learning Techniques Used

Predictive maintenance relies on a mix of advanced machine learning techniques:

- Anomaly Detection: This identifies unusual patterns in sensor data, flagging deviations from normal behavior. For instance, Tesla uses such methods in its battery management systems to monitor and optimize battery performance at the cell level.

- Time-Series Forecasting: Algorithms analyze historical data to predict when equipment might fail. This is particularly useful for scheduling maintenance before issues arise.

- Bayesian Optimization: In high-precision manufacturing, this technique fine-tunes processes to enhance output quality.

- Convolutional Neural Networks (CNNs): These are used to process visual data, like images from inspection cameras, to detect material defects or abnormalities.

By combining these techniques, models stay updated, adapting to changes in equipment performance over time and improving their predictions as more data becomes available.

Tools and Frameworks Applied

A range of tools and frameworks brings predictive maintenance to life:

- Amazon SageMaker: This platform simplifies the process of building, training, and deploying machine learning models. For example, bp developed its Model DevOps Framework on SageMaker to handle well sensor data and wind turbine algorithms. Over nine months (October 2020 to July 2021), the team, led by Chris Coley, created a standardized system for managing data models.

"The Model DevOps Framework, powered by AWS, creates a standardized approach to provide control, management, monitoring, and auditability of data models while enforcing code quality." - Chris Coley, In-house Data Scientist and Product Owner, bp

- Industrial IoT Platforms: GE's IIoT system gathers and processes sensor data from machinery worldwide, feeding it into machine learning models for real-time analysis.

- Infrastructure as Code (IaC): This manages cloud resources, ensuring scalability for large-scale data processing.

- Serverless Environments: These reduce costs by charging only for the computing power used during model training.

These tools bridge the gap between cutting-edge machine learning and practical, business-focused outcomes.

Measurable Business Impact

The financial benefits of predictive maintenance are both clear and significant. Siemens, for instance, slashed maintenance costs by 30% through real-time analytics of sensor data. bp's Model DevOps Framework is on track to deliver 5%–25% cost savings in solution design and provisioning. Google DeepMind applied similar principles to data center management, cutting cooling energy usage by up to 40% through machine learning forecasts.

When showcasing your predictive maintenance experience, tie technical achievements to real-world business outcomes. Detail how much downtime you reduced, the dollar amount saved in maintenance, or the increase in equipment lifespan. Highlight the scale of your work - whether it involved processing millions of sensor readings, managing hundreds of devices, or analyzing terabytes of historical data. These concrete results prove your machine learning expertise can deliver measurable value in industries reliant on physical infrastructure and equipment.

9. End-to-End ML Pipeline and MLOps Deployment

Business Problem Addressed

One of the biggest hurdles for data science teams is moving models from notebooks to production. Without a streamlined process, each deployment becomes a time-consuming, custom-built effort. Data scientists often find themselves bogged down by weeks of infrastructure setup, manual cloud provisioning, and lengthy security checks. This chaotic approach results in inconsistent monitoring, delayed updates, and, ultimately, underutilized models. The opportunity to scale machine learning effectively slips through the cracks.

BP encountered this exact challenge across its global operations. To tackle it, the company collaborated with AWS Professional Services to create a Model DevOps Framework. This framework introduced automation for infrastructure provisioning, built-in security protocols, and tools to monitor data drift - when production data starts differing from training data. With this system in place, BP's data teams could focus on refining models and running experiments instead of wrestling with cloud setups. The solution not only streamlined their workflows but also set the stage for scalable, efficient machine learning deployment.

Machine Learning Techniques Used

A successful MLOps pipeline isn’t built on a single technique but rather a combination of interconnected tools and processes. Here’s how BP’s pipeline worked:

- Infrastructure as Code (IaC): Automated the setup of serverless environments, ensuring consistent and secure project infrastructure.

- Automated Monitoring: Kept an eye on model performance in real-time, flagging issues like prediction degradation caused by data drift.

- Serverless Training: Reduced cloud costs by activating compute resources only when needed and shutting them down immediately after.

- Data Virtualization: Enabled models to directly access centralized data hubs, eliminating the need for manual data transfers.

These components worked together to create a seamless pipeline where models transitioned smoothly from development to production, all while maintaining top-tier quality and security.

Tools and Frameworks Applied

BP’s Model DevOps Framework was built around Amazon SageMaker, a fully managed service for model building, training, and deployment. With its serverless architecture, data scientists could set up their environments independently, bypassing IT bottlenecks. Other key tools included:

- AWS Lambda for executing tasks on-demand.

- Amazon EC2 and RDS for robust compute and storage solutions.

- Integration with BP’s centralized Data Hub for automated data handling.

- CI/CD pipelines to enable quick testing and deployment of new models.

This cohesive setup allowed BP to standardize its ML processes, ensuring control, monitoring, and auditability while improving code quality.

"The Model DevOps Framework, powered by AWS, creates a standardized approach to provide control, management, monitoring, and auditability of data models while enforcing code quality." – Chris Coley, In-house Data Scientist and Product Owner, BP

"By having on-demand compute management facility, Amazon SageMaker reinforces cloud resource optimization seamlessly for our data professionals." – Abeth Go, Vice President for Data & Analytics Platforms, BP

Measurable Business Impact

BP’s Model DevOps Framework was rolled out in just 9 months, delivering measurable results across multiple fronts. The company achieved 5%–25% cost savings and drastically reduced the time needed for security reviews from months to weeks. Engineers saved countless hours previously spent on infrastructure design, allowing data scientists to dedicate more time to refining models and exploring new ideas.

The framework also scaled deployments faster and introduced real-time monitoring systems that caught performance issues before they could impact operations. This efficient, standardized approach not only reduced costs but also empowered teams to deliver more impactful machine learning solutions at scale. It’s a prime example of how robust MLOps practices can transform an organization’s ability to leverage data science effectively.

10. Explainable AI in High-Stakes Domains

Business Problem Addressed

In critical areas like loan approvals, medical diagnoses, and risk assessments, understanding the reasoning behind machine learning (ML) models is a must. Stakeholders - including regulators, customers, and internal teams - demand transparency to ensure decisions are fair, accurate, and compliant with legal standards. For example, financial institutions are legally obligated to explain credit decisions, healthcare providers need to confirm diagnostic tools are focusing on relevant data, and insurance companies must justify their risk assessments to both regulators and policyholders.

Zest AI tackled this issue head-on in credit underwriting. Their explainable ML models analyzed over 1,600 variables to evaluate loan applications. What set their approach apart? They made the decision-making process transparent, showing exactly which factors influenced each outcome. This allowed lenders to comply with regulatory requirements while achieving 10–20% higher approval rates without increasing risk. By ensuring decisions were free from bias and irrelevant data, Zest AI built trust with regulators and applicants alike. In high-stakes fields, this kind of transparency has become just as crucial as the accuracy of the ML models themselves.

Machine Learning Techniques Used

Explainable AI relies on methods that make a model's decision-making process more understandable. Here are a few key techniques:

- LIME (Local Interpretable Model-agnostic Explanations): Simplifies complex models by approximating them locally with interpretable ones, making decisions easier to explain.

- GRAD-CAM: In computer vision, this technique generates heatmaps to show which parts of an image influenced a classification.

- Feature Importance Rankings: Highlights which variables have the most influence on predictions.

- Uncertainty Quantification: Methods like Conformal Prediction provide confidence intervals instead of single-point predictions, helping users gauge the reliability of outputs.

These techniques not only demystify how models work but also make them more practical for real-world applications.

Tools and Frameworks Applied

Several tools and frameworks are paving the way for explainable AI:

- SHAP (SHapley Additive exPlanations): A widely used tool for feature attribution, offering mathematically sound insights into model predictions.

- IBM Watson: In oncology, Watson uses explainable ML to rank treatment options, giving clinicians the ability to validate recommendations and spot overlooked alternatives.

- LinkedIn: The platform developed an explainable recommendation system to predict customer churn and identify upsell opportunities. By making the reasoning behind flagged leads clear, sales teams could act with confidence.

Explainable AI is becoming a must-have skill for ML professionals. Platforms like scale.jobs help candidates showcase their expertise in building transparent systems, alongside other advanced ML projects.

Measurable Business Impact

The benefits of explainable AI go beyond compliance and trust - they drive tangible results. For instance:

- Zest AI's transparent credit scoring: Enabled lenders to approve more qualified applicants while maintaining risk levels, directly boosting revenue.

- Healthcare predictive models: Reduced hospital readmission rates by over 15%, as clinicians could pinpoint and address specific risk factors.

- Manufacturing defect detection: Facilities using GRAD-CAM explanations improved their defect detection rates from 85% to 96%, as engineers could verify the system was identifying legitimate issues.

"Explainable AI is transforming how organizations operate, compete, and deliver value at scale by moving from mere predictive algorithms to a more data-centric, transparent discipline." – DigitalDefynd

When domain experts can see and verify why AI systems make certain decisions, trust grows. This trust not only accelerates adoption but also ensures that accurate predictions translate into meaningful business outcomes.

How scale.jobs Helps You Build a Better Machine Learning Resume

Your machine learning (ML) case studies showcase your skills and achievements, and your resume should do the same. Turning those case studies into a polished, ATS-friendly resume can take hours, but scale.jobs simplifies the process using free job application tools with AI-powered structured data extraction. In just one click, it organizes technical details with precision.

This efficient approach sets the stage for other features that make scale.jobs stand out. The platform’s AI transforms passive descriptions into action-packed bullet points that highlight measurable results and professional formatting. For instance, upload a case study on fraud detection, and the system might convert "Responsible for building a model that detected fraudulent transactions" into a concise, results-driven statement emphasizing performance improvements and tangible outcomes.

But scale.jobs doesn’t stop at automating resume creation. Through collaborations and added services, it provides broader support. In July 2021, AgentScale AI (the tech behind scale.jobs) teamed up with SELF to introduce an AI Resume Maker. This tool drastically cut down the time needed to craft resumes and has since helped recent graduates, laid-off professionals, and international candidates by automatically removing sensitive details and generating job-ready documents.

"I'm incredibly grateful to Jet for creating the AI Resume Maker! The mobile accessibility is a game-changer... His proactive approach... gave us the confidence that this development was not only possible but achievable." – Wei Ching, SELF

For those seeking additional assistance, scale.jobs offers Human Assistant services at a flat rate of $199 for 250 applications. This service includes custom, ATS-optimized resumes and cover letters delivered within 24 hours. You’ll also receive real-time updates via WhatsApp and proof-of-work screenshots, saving you over 20 hours each week - time better spent on networking and interviews.

Whether you’re just starting out or have years of experience, scale.jobs accepts a variety of file formats (Word, PDF, images) and transforms them into polished, job-ready resumes. It eliminates unnecessary jargon and ensures your ML projects are presented with clarity and impact.

"AgentScale AI goes beyond automation - we guarantee accuracy, enforce structured outputs, and ensure every AI-generated resume meets professional hiring standards." – AgentScale AI

Conclusion

Machine learning achievements in real-world projects often serve as the bridge between technical know-how and career advancement. By showcasing case studies that highlight measurable results - like boosting customer retention or cutting down on fraud - you’re proving your ability to make a tangible business impact. For example, BP's Model DevOps Framework [1] delivered cost savings of 5%–25% while ensuring standardized code quality - results that hiring managers find hard to ignore.

But standing out in a competitive job market takes more than just strong results; it requires a resume that grabs attention. Many job seekers spend countless hours tweaking resumes to meet keyword and formatting requirements. scale.jobs simplifies that process with AI-powered tools that transform your ML projects into polished, impactful bullet points in just one click. For those seeking extra help, their Human Assistant service offers comprehensive support for $199.

Whether you're a recent graduate showcasing your first computer vision project or a seasoned professional with extensive MLOps experience, your case studies need to reflect a complete skill set. Chris Coley, In-house Data Scientist and Product Owner at BP, underscored this when describing their framework:

"The Model DevOps Framework... creates a standardized approach to provide control, management, monitoring, and auditability of data models while enforcing code quality."

This kind of detail - proving you can take machine learning models from concept to production - sets exceptional candidates apart from the crowd.

Your ML case studies are your most powerful asset in advancing your career. They deserve a resume that does them justice. With scale.jobs' free ATS checker, AI-driven customization tools, and optional human-powered services, you can spend less time on formatting and more time connecting with opportunities. Start crafting your standout ML resume today at scale.jobs and let your work speak for itself.

[1] AWS Customer Stories, 2022

FAQs

How can machine learning projects make my resume stand out?

Including machine learning projects on your resume is a powerful way to demonstrate your ability to tackle challenges using data. Recruiters look for candidates who can showcase technical expertise, problem-solving skills, and tangible results. To make your projects stand out, focus on the business problem you addressed, the ML techniques you used (like classification, time-series forecasting, or computer vision), and the tools or frameworks involved (such as Python, TensorFlow, or cloud-based MLOps platforms). Most importantly, highlight the impact of your work - whether it’s cost reduction, improved efficiency, or process automation.

For instance, if you developed a predictive model to optimize inventory, you might emphasize how it reduced waste and boosted operational efficiency. Or, if you crafted an AI-driven tool to automate a repetitive task, you could point out how it saved significant time and resources. Clearly outlining the problem, your approach, and the results not only shows your technical proficiency but also underscores the value you bring to a business - qualities that both hiring managers and applicant tracking systems (ATS) actively seek.

What are the key tools needed for successfully deploying machine learning models?

Deploying machine learning models successfully involves using a mix of tools, each tailored to specific stages of the process. For instance, container platforms like Kubernetes are essential for managing and scaling workloads, while workflow orchestration tools such as Kubeflow Pipelines or Prefect help automate and manage complex workflows. When it comes to serving models, frameworks like TensorFlow Serving, BentoML, or Seldon Core are commonly used.

To keep track of model versions and experiments, experiment tracking systems like MLflow or Neptune come into play. Once the models are in production, maintaining their performance and reliability becomes critical, which is where monitoring tools like Prometheus and Grafana prove invaluable. Together, these tools create a cohesive system that simplifies deployment, ensures scalability, and keeps models performing at their best over time.

How does scale.jobs ensure your resume is optimized for ATS systems?

Scale.jobs features an AI-driven resume builder designed to make your resume stand out in Applicant Tracking Systems (ATS). It fine-tunes keywords, adjusts formatting, and organizes the structure to meet ATS standards, ensuring your resume is tailored to the job and optimized for better visibility.